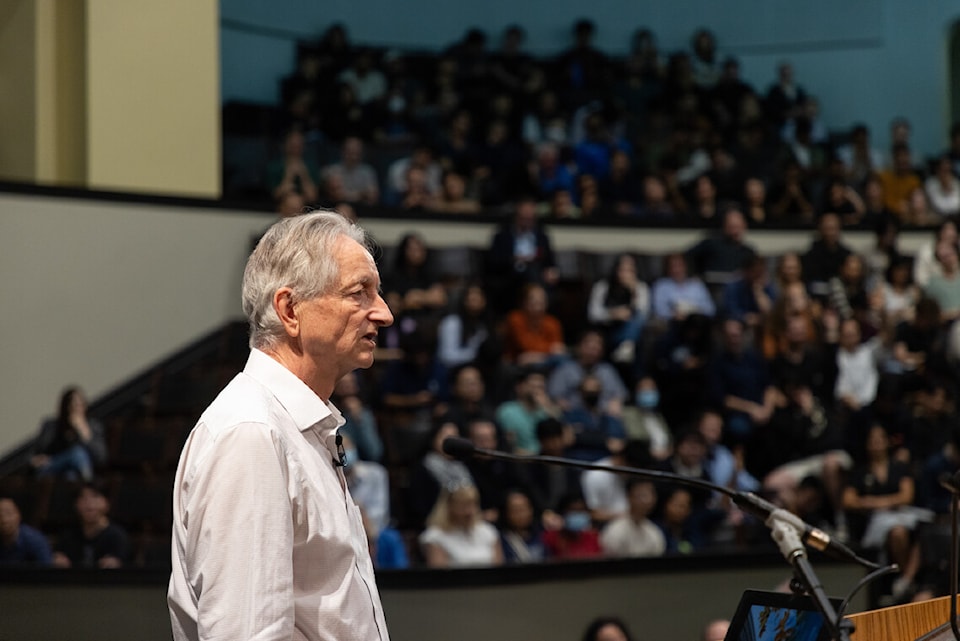

Geoffrey Hinton, a Professor Emeritus at the University of Toronto, was awarded the 2024 Nobel Prize in Physics for his work in artificial neural networks and deep learning.

Often regarded as the “Godfather of AI,” Hinton shared the prize with John J. Hopfield of Princeton University for foundational discoveries and inventions that enable machine learning with artificial neural networks.

But despite being a founding father of the field, Hinton has concerns about where artificial intelligence is heading and has called for more research into safety.

He was picked for the high-profile award for using the Hopfield network, established by his co-laureate, as the foundation for a new network named the Boltzmann machine, which can learn to recognize elements within a given type of data.

The Boltzmann machine can classify images and generate new examples of the type of pattern on which it was trained. Hinton and his graduate students expanded on this work, helping to usher in today's rapid development of machine learning.

The technology now supports a wide range of applications, including ChatGPT and self-driving cars.

In a media release, Hinton said he was surprised at receiving the award.

“I had absolutely no idea that I would even be nominated. I dropped out of physics after my first year at university because I couldn't do the complicated math,” he said.

Hinton acknowledged many researchers who contributed to the field over the years. He cited his late mentor, David Rumelhart, with whom he developed the backpropagation algorithm, and his fellow researcher Terry Sejnowski.

“I think of the prize as a recognition of a large community of people who worked on neural networks for many years before they worked really well,” Hinton said.

He praised the talent of his students, many of whom have made significant advances in artificial intelligence.

Hinton recalled a time when many experts rejected neural networks as ineffective.

“It was slightly annoying that most people in the field said that neural networks would never work,” he said, pointing out that those experts were wrong.

Hinton spoke about AI's future, saying that it can enhance productivity and improve healthcare. However, he also warned about the risks of AI systems growing more intelligent.

“My worry is that it may lead to bad things,” he said, urging the need for more research on AI safety.

Hinton said many leading researchers believe AI could surpass human intelligence within the next five to 20 years but acknowledged uncertainty surrounding the outcomes.

“There are very few examples of more intelligent things being controlled by less intelligent things,” he said.

“We need to think hard about what happens then,” he said, calling for more safety research to reduce potential risks.

Hinton said there is a need for large companies to allocate more resources toward safety research. He said that currently, most funding focuses on enhancing model performance rather than ensuring safety.

“The effort needs to be more than like one per cent… It needs to be maybe a third of the effort goes into AI safety because if this stuff becomes unsafe, that’s extremely bad,” he said.

Hinton questioned the direction of AI companies in terms of safety, specifically mentioning Sam Altman of OpenAI.

“It turned out that Sam Altman was much less concerned with safety than with profits. And I think that's unfortunate,” he said.

Hinton also said he has concerns about the dangers posed by AI, such as the potential for misinformation and cyber-attacks.

“Immediate risks are things like fake videos, corrupting elections... there was a 1,200 per cent increase in the number of phishing attacks,” he said, highlighting the urgent need for caution in AI's development and application.

Addressing concerns that the burgeoning technology could have a “dumbing down” effect on people, Hinton compared it to the introduction of pocket calculators in education.

“I don't think it will have a significant dumbing down effect,” he said. “I think it'll make people smarter, not dumber.”

Hinton said he is not suggesting slowing AI advancement.

“I've never recommended slowing the advancement of AI because I don't think that's feasible,” he said.

Hinton said AI could play a positive role in many fields, citing healthcare in particular. He predicted that AI will significantly improve diagnostic capabilities, enhancing patient outcomes.

“The combination of the doctor with the AI system gets 60 per cent correct, which is a big improvement,” he said, suggesting that AI could serve as a family doctor with vast experience from analyzing millions of cases.

Hinton's contributions have shaped the field of AI and positioned Canada as a key player in research and innovation. He said that while research funding in Canada may not match that of the U.S., it is used effectively to support fundamental, curiosity-driven research.

He said he thinks Canada “is doing quite well” given the resources it has.

Hinton is the fourth faculty member from the U of T to receive a Nobel Prize.